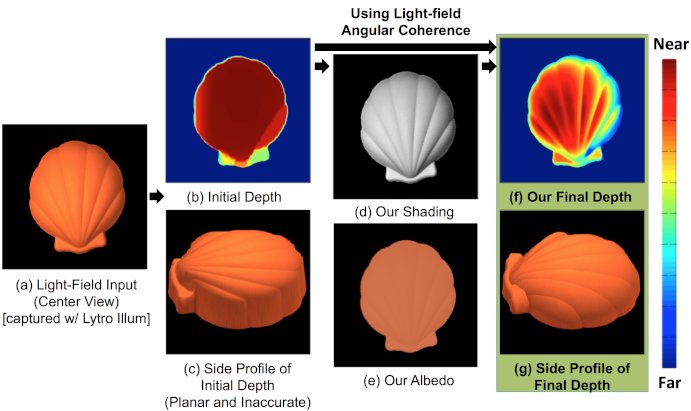

Light-field cameras capture both spatial and angular data, which makes them suitable for refocusing. By locally refocusing each spatial pixel to its estimated depth, we produce an all-in-focus image in which all viewpoints converge onto a single point in the scene. The angular pixels therefore exhibit angular coherence, which has three properties: photo consistency, depth consistency, and shading consistency. We propose a new framework that uses angular coherence to optimize depth and shading. The optimization framework estimates both general lighting in natural scenes and shading to improve depth regularization. Our method outperforms current state-of-the-art light-field depth estimation algorithms in multiple scenarios, including real images.
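The photo-consistency property can be illustrated with a toy sketch. It is not the paper's algorithm: it assumes a 1D "flatland" light field, a Lambertian scene with a single integer disparity, and wrap-around indexing, and every name in it (`make_light_field`, `angular_variance`, `estimate_disparity`, `TEXTURE`) is hypothetical. When the light field is sheared (refocused) by the correct disparity, all angular samples at a pixel see the same scene point, so their variance drops to zero.

```python
# Toy illustration of photo consistency. Assumptions (not from the paper):
# 1D flatland light field, Lambertian scene, one integer disparity, wrap-around.

TEXTURE = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3]


def make_light_field(texture, disparity, views):
    """Synthesize one row per view u: each view is the texture shifted by disparity * u."""
    n = len(texture)
    return {u: [texture[(x + disparity * u) % n] for x in range(n)] for u in views}


def angular_variance(light_field, shear, x):
    """Variance across views at spatial pixel x after shearing each view by shear * u."""
    n = len(next(iter(light_field.values())))
    samples = [row[(x - shear * u) % n] for u, row in light_field.items()]
    mean = sum(samples) / len(samples)
    return sum((s - mean) ** 2 for s in samples) / len(samples)


def estimate_disparity(light_field, candidates):
    """Pick the candidate shear with the lowest total angular variance."""
    n = len(next(iter(light_field.values())))

    def cost(shear):
        return sum(angular_variance(light_field, shear, x) for x in range(n))

    return min(candidates, key=cost)


light_field = make_light_field(TEXTURE, disparity=2, views=range(-2, 3))
best = estimate_disparity(light_field, candidates=range(-3, 4))
```

Here `best` recovers the disparity used to synthesize the views, because only that shear makes every angular sample agree. Real scenes need sub-pixel shears, per-pixel depth, and the shading and depth consistency terms described above.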

Michael Tao, Pratul Srinivasan, Jitendra Malik, Szymon Rusinkiewicz, and Ravi Ramamoorthi.

"Depth from Shading, Defocus, and Correspondence Using Light-Field Angular Coherence."

Computer Vision and Pattern Recognition (CVPR), June 2015.

@inproceedings{Tao:2015:DFS,
  author = "Michael Tao and Pratul Srinivasan and Jitendra Malik and Szymon Rusinkiewicz and Ravi Ramamoorthi",
  title = "Depth from Shading, Defocus, and Correspondence Using Light-Field Angular Coherence",
  booktitle = "Computer Vision and Pattern Recognition (CVPR)",
  year = "2015",
  month = jun
}