A𝛿: Autodiff for Discontinuous Programs – Applied to Shaders

ACM SIGGRAPH, to appear, August 2022

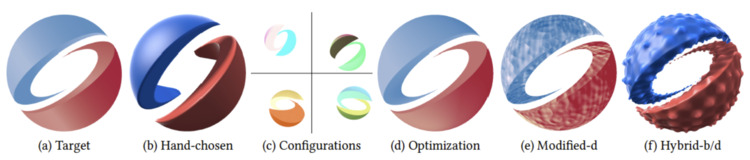

Our compiler automatically differentiates discontinuous shader programs by extending reverse-mode automatic differentiation (AD) with novel differentiation rules. This allows efficient gradient-based optimization methods to optimize program parameters to best match a target (a), which is difficult to do by hand (b). Our pipeline takes as input a shader program initialized with configurations (c) that look very different from the reference, and converges to a result that is nearly visually identical to it (d) within 15 seconds. The compiler can also output the shader program with optimized parameters to GLSL, which allows programmers to interactively edit or animate the shader, for example by adding texture (e). The optimized parameters can also be combined with other shader programs (e.g. b) to leverage their visual appearance while keeping the geometry close to the reference. For animation results, please refer to our supplemental video.

Abstract

Over the last decade, automatic differentiation (AD) has profoundly impacted graphics and vision applications, both broadly via deep learning and specifically for inverse rendering. Traditional AD methods ignore gradients at discontinuities, instead treating functions as continuous. Rendering algorithms intrinsically rely on discontinuities, crucial at object silhouettes and in general for any branching operation. Researchers have proposed fully-automatic differentiation approaches for handling discontinuities by restricting to affine functions, or semi-automatic processes restricted either to invertible functions or to specialized applications like vector graphics. This paper describes a compiler-based approach to extend reverse-mode AD so as to accept arbitrary programs involving discontinuities. Our novel gradient rules generalize differentiation to work correctly, assuming there is a single discontinuity in a local neighborhood, by approximating the pre-filtered gradient over a box kernel oriented along a 1D sampling axis. We describe when such approximation rules are first-order correct, and show that this correctness criterion applies to a relatively broad class of functions. Moreover, we show that the method is effective in practice for arbitrary programs, including features for which we cannot prove correctness. We evaluate this approach on procedural shader programs, where the task is to optimize unknown parameters in order to match a target image, and our method outperforms baselines in terms of both convergence and efficiency. Our compiler outputs gradient programs in both TensorFlow (for quick prototypes) and Halide with an optional auto-scheduler (for efficiency). The compiler also outputs GLSL that renders the target image, allowing users to modify and animate the shader, which would be cumbersome in other representations such as triangle meshes or vector art.
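To make the idea of a pre-filtered gradient concrete, the following is a minimal Python sketch for a one-dimensional step function whose edge position `theta` is the parameter. It is an illustration under stated assumptions, not the compiler's actual gradient rules: the names `step`, `naive_grad`, `prefiltered_grad`, and `total`, the pixel count `N`, and the choice of a box width equal to one pixel are all hypothetical.

```python
# Illustrative sketch only: a 1D step "shader" and a box-prefiltered gradient.
# All names here (step, prefiltered_grad, N, EPS) are hypothetical and are not
# taken from the Adelta compiler or the paper.

N = 64            # number of pixels on the unit interval (assumed)
EPS = 1.0 / N     # box kernel width, chosen equal to one pixel (assumed)


def step(x, theta):
    """Discontinuous program: 1 where x > theta, else 0."""
    return 1.0 if x > theta else 0.0


def naive_grad(x, theta):
    """Gradient w.r.t. theta when the branch is treated as piecewise constant."""
    return 0.0


def prefiltered_grad(x, theta, eps=EPS):
    """Gradient w.r.t. theta of step averaged over [x - eps/2, x + eps/2].

    The average equals the fraction of the box lying above theta, so its
    derivative is minus the jump of step across the box, divided by eps.
    """
    jump = step(x + eps / 2, theta) - step(x - eps / 2, theta)
    return -jump / eps


def total(grad_fn, theta):
    """Sum per-pixel gradients times pixel width: d/dtheta of the image integral."""
    centers = [(i + 0.5) / N for i in range(N)]
    return sum(grad_fn(x, theta) for x in centers) / N
```

With `theta = 0.37`, `total(naive_grad, 0.37)` is 0, so a gradient-based optimizer would receive no signal for moving the edge. `total(prefiltered_grad, 0.37)` is -1, which matches the derivative of the covered area `1 - theta`: only the single pixel whose box straddles the edge contributes.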

Links

- Paper (6MB Preprint)

- Video: Short (4 min)

- Video: Long (15 min)

- Video: Live Demo (25 min)

- GitHub (Adelta) (New: PyTorch backend)

Medium Articles

- Introduction Part 1: Math Basics

- Introduction Part 2: DSL

- Tutorial Part 1: Differentiating a Simple Shader Program

- Tutorial Part 2: Raymarching Primitive

- Tutorial Part 3: Animating the SIGGRAPH logo

- Tutorial Part 4: Animating the Celtic Knot

Citation

Yuting Yang, Connelly Barnes, Andrew Adams, and Adam Finkelstein.

"A𝛿: Autodiff for Discontinuous Programs – Applied to Shaders."

ACM SIGGRAPH, to appear, August 2022.

BibTeX

@inproceedings{Yang:2022:AAF,

author = "Yuting Yang and Connelly Barnes and Andrew Adams and Adam Finkelstein",

title = "A$\delta$: Autodiff for Discontinuous Programs -- Applied to Shaders",

booktitle = "ACM SIGGRAPH, to appear",

year = "2022",

month = aug

}