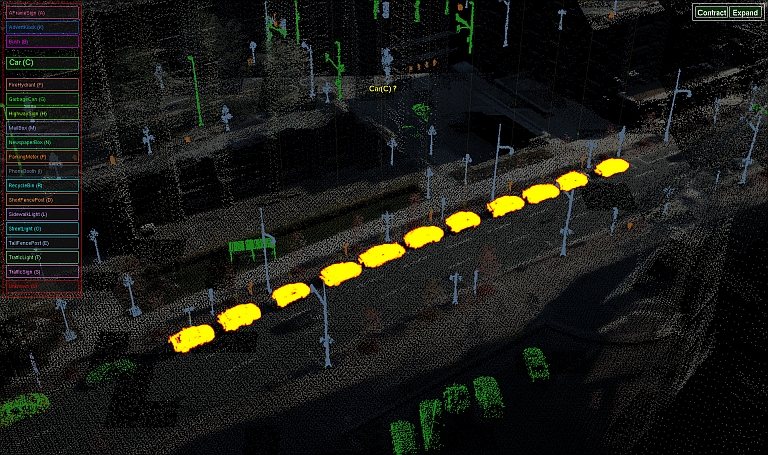

The goal of this work is to design an interface that streamlines the process of labeling objects in large 3D point clouds. Since automatic methods are inaccurate and manual annotation is tedious, this work assumes that the annotator must verify every object's label, and it focuses on minimizing the effort required from the user to accomplish this task without loss of accuracy.

Inspired by work in the related fields of image, video, and text annotation, by techniques used in machine learning, and by perceptual psychology, this work proposes and evaluates three interaction models and annotation interfaces for object labeling in 3D LiDAR scans. The first interface leaves control over the annotation session in the user's hands and offers additional tools, such as online prediction updates, group selection, and filtering, to increase the throughput of information from the user to the machine. In the second interface, non-essential yet time-consuming tasks (e.g., scene navigation, selection decisions) are delegated to the machine through an active learning approach, reducing the user fatigue and distraction these tasks cause. Finally, a third, hybrid approach is proposed: a group active interface. It queries objects in groups that are easy to understand and label together, aiming to combine the advantages of the first two interfaces. Empirical evaluation of this approach indicates an improvement by a factor of 1.7 in annotation time compared to the other methods discussed, without loss of accuracy.

Aleksey Boyko.

"Efficient Interfaces for Accurate Annotation of 3D Point Clouds."

PhD Thesis, Princeton University, February 2015.

@phdthesis{:2015:EIF,
  author = "Aleksey Boyko",
  title = "Efficient Interfaces for Accurate Annotation of {3D} Point Clouds",
  school = "Princeton University",
  year = "2015",
  month = feb
}