Dynamic Hair Capture

Princeton University, August 2011

Abstract

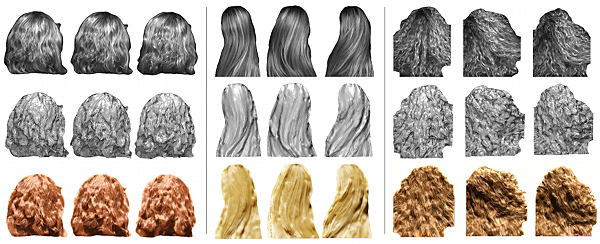

The realistic reconstruction of hair motion is challenging because of hair's complex occlusion, lack of a well-defined surface, and non-Lambertian material. We present a system for passive capture of dynamic hair performances using a set of high-speed video cameras. Our key insight is that, while hair color is unlikely to match across multiple views, the response to oriented filters will. We combine a multi-scale version of this orientation-based matching metric with bilateral aggregation, an MRF-based stereo reconstruction technique, and algorithms for temporal tracking and denoising. Our final output is a set of hair strands for each frame, grown according to the per-frame reconstructed rough geometry and orientation field. We demonstrate results for a number of hairstyles ranging from smooth and ordered to curly and messy.
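To illustrate the key insight above (orientation, unlike color, tends to agree across views), here is a minimal single-scale sketch in Python. It assumes a quadrature pair of Gabor filters evaluated at 8 angles (sigma = 2, wavelength = 4 px) and a plain angular distance as the matching cost. These choices and all function names are hypothetical illustrations, not the report's actual filters, multi-scale metric, bilateral aggregation, or MRF stereo.

```python
import math

# Hypothetical filter settings (sigma, wavelength, window size); not taken from the report.
def gabor_pair(theta, sigma=2.0, wavelength=4.0, half=3):
    """Even/odd Gabor kernels tuned to stripes running along angle theta (radians)."""
    even, odd = [], []
    for y in range(-half, half + 1):
        row_e, row_o = [], []
        for x in range(-half, half + 1):
            # u is the coordinate across the stripes, i.e. along the normal to theta.
            u = -x * math.sin(theta) + y * math.cos(theta)
            g = math.exp(-(x * x + y * y) / (2 * sigma * sigma))
            row_e.append(g * math.cos(2 * math.pi * u / wavelength))
            row_o.append(g * math.sin(2 * math.pi * u / wavelength))
        even.append(row_e)
        odd.append(row_o)
    # Remove the DC term of the even kernel so a constant offset gives no response.
    mean = sum(map(sum, even)) / (2 * half + 1) ** 2
    even = [[v - mean for v in row] for row in even]
    return even, odd


def filter_energy(img, cx, cy, kernels):
    """Phase-invariant quadrature energy of one filter pair at pixel (cx, cy)."""
    even, odd = kernels
    half = len(even) // 2
    re = im = 0.0
    for j in range(-half, half + 1):
        for i in range(-half, half + 1):
            p = img[cy + j][cx + i]
            re += even[j + half][i + half] * p
            im += odd[j + half][i + half] * p
    return math.hypot(re, im)


def dominant_orientation(img, cx, cy, n_angles=8):
    """Angle in [0, pi) whose filter pair responds most strongly at (cx, cy)."""
    angles = [k * math.pi / n_angles for k in range(n_angles)]
    energies = [filter_energy(img, cx, cy, gabor_pair(a)) for a in angles]
    best = max(range(n_angles), key=lambda k: energies[k])
    return angles[best]


def orientation_cost(theta_a, theta_b):
    """Distance between two undirected orientations; angles wrap with period pi."""
    d = abs(theta_a - theta_b) % math.pi
    return min(d, math.pi - d)


# Toy "hair" patches: stripes with a 4-pixel period.
size = 15
vertical = [[math.cos(math.pi * x / 2) for x in range(size)] for y in range(size)]
horizontal = [[math.cos(math.pi * y / 2) for x in range(size)] for y in range(size)]
# A second "view" of the vertical patch with different gain and offset,
# standing in for view-dependent appearance (intensities that would not match).
vertical_b = [[0.5 + 2.0 * v for v in row] for row in vertical]

theta_v = dominant_orientation(vertical, 7, 7)
theta_b = dominant_orientation(vertical_b, 7, 7)
theta_h = dominant_orientation(horizontal, 7, 7)
print(theta_v, theta_b, theta_h)
print(orientation_cost(theta_v, theta_b), orientation_cost(theta_v, theta_h))
```

Scaling and offsetting the intensities changes the raw values a color-matching cost would compare, but not the dominant orientation, which is the property the abstract relies on. Angles are compared modulo pi because a hair strand has no preferred direction in the image. A real system would add multiple scales, aggregate costs over neighborhoods, and solve for depth globally; none of that is shown here.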

Paper

Video

- MOV (235 MB)

Links

- Princeton CS tech report

- Project page by Hao Li

Citation

Linjie Luo, Hao Li, Thibaut Weise, Sylvain Paris, Mark Pauly, and Szymon Rusinkiewicz.

"Dynamic Hair Capture."

Technical Report TR-907-11, Princeton University, August 2011.

BibTeX

@techreport{Luo:2011:DHC,
  author = "Linjie Luo and Hao Li and Thibaut Weise and Sylvain Paris and Mark Pauly and Szymon Rusinkiewicz",
  title = "Dynamic Hair Capture",
  institution = "Princeton University",
  year = "2011",
  month = aug,
  number = "TR-907-11"
}